TEAM CONNECTED Artificial Intelligence & HOUSELESSNESS Experiment | CHATbots

[re-Edited: 09.17.2025]

TEAM CONNECTED has published a number of Blog Posts during the last few months. However, this post may be the most disturbing for readers. Researching and writing it has certainly been a deeply impactful experience for me, and what is presented below merely skims the surface of these topics. This post is divided into four sections addressing:

AI CHATbots and the Impact of PROMPT queries containing “confirmation biases”;

AI Safety, Risks and Job Replacement Predictions;

AI CHATbots and Nationwide Litigation involving them and the deaths of teenage users; and

AI CHATbots and users relying upon them for religious and spiritual guidance

Ultimately, readers will be able to apply the content of this post to their own lives in the Lakes Region in multiple ways.

PREVIOUS Blog Posts in the Lakes Region by TEAM CONNECTED

As a consequence of previous articles on HOUSELESSNESS in the Lakes Region, some readers responded by email and text. Some of those responses contained artificial intelligence (AI) generated summaries of links and descriptions of resources currently available to the HOUSELESS. Others presented AI-generated suggestions for policies to consider in addressing the challenges of HOUSELESSNESS in the Lakes Region. If nothing else, these responses launched a short experiment.

EXPERIMENT with a CHATbot

AI CHATbots and the Impact of PROMPT queries containing “confirmation biases”

After reading those responses from readers to prior Blog Posts, I decided to perform an experiment by entering two PROMPTS on the subject of HOUSELESSNESS into one such AI CHATbot.

AI CHATbot Prompt #1

Create one paragraph of how to compassionately solve HOUSELESSNESS in a community and provide a list of 5 reasons why each is compassionate

Response:

To compassionately address homelessness in a community, it is essential to implement a holistic approach that includes increasing access to affordable housing, providing mental health and addiction support, creating job opportunities, and fostering community engagement and understanding. This approach demonstrates compassion by acknowledging the dignity and humanity of every individual, prioritizing their well-being, and actively working to remove barriers they face. Five reasons why each of these steps is compassionate include:

1) they respect the inherent worth of every person;

2) they aim to restore stability and hope;

3) they recognize the complex circumstances that lead to homelessness;

4) they promote social inclusion and reduce stigma; and

5) they foster a sense of community care and shared responsibility, ultimately nurturing a more empathetic and supportive society.

AI CHATbot Prompt #2

Create a paragraph of 5 reasons from the Bible with verse references addressing the need to provide compassion for Houseless people in a community.

Response:

The Bible emphasizes the importance of showing compassion and care for those in need, including houseless individuals, through numerous verses.

Proverbs 19:17 states, "Whoever is kind to the poor lends to the Lord, and he will reward them for what they have done," highlighting that caring for the vulnerable is a divine obligation.

Matthew 25:40 reminds us, "Truly I tell you, whatever you did for one of the least of these brothers and sisters of mine, you did for me," emphasizing that caring for the houseless is serving Christ himself.

James 1:27 calls believers to "look after orphans and widows in their distress," which extends to caring for all marginalized people.

Leviticus 19:34 commands, "The foreigner residing among you must be treated as your native-born. Love them as yourself," underscoring the importance of love and equality for all, regardless of their circumstances.

Lastly, Luke 6:36 encourages, "Be merciful, just as your Father is merciful," reminding us that compassion reflects God's mercy, urging us to extend kindness to the houseless as an act of faith and love.

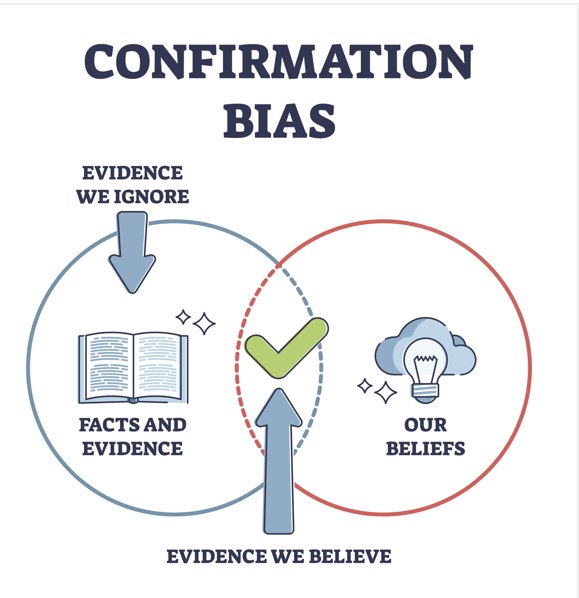

A bit of Critical Thinking is required for an honest analytical comparison of both prompts. PROMPT#1 contained my own “confirmation bias” in the query; that is, the response had to be limited to how to “compassionately” solve HOUSELESSNESS. Readers may be unfamiliar with the phrase “confirmation bias”.

“… Confirmation Bias is the tendency to look for information that supports, rather than rejects, one’s preconceptions, typically by interpreting evidence to confirm existing beliefs while rejecting or ignoring any conflicting data (American Psychological Association). … Confirmation bias in psychology is the tendency to favor information that confirms existing beliefs or values. People exhibiting this bias are likely to seek out, interpret, remember, and give more weight to evidence that supports their views, while ignoring, dismissing, or undervaluing the relevance of evidence that contradicts them. …” SOURCE

The confirmation bias in PROMPT#1 is that the query contained a belief that solving HOUSELESSNESS must be compassionate. In PROMPT#2, the query added the belief that the case for compassion toward the HOUSELESS must be found in five verses from the Bible. It should not be lost on readers that the response to PROMPT#1 did not refer to the Bible as a reason for compassion toward the HOUSELESS. Instead, the AI-generated response described a “holistic approach” as the motivation for providing compassion to the HOUSELESS.

Artificial Intelligence was featured in a Letter to the Editor in The Laconia Sun on 09.17.2025. Readers may appreciate its local relevance to the Lakes Region throughout this Blog Post.

So Which of the Latest Developments in ARTIFICIAL INTELLIGENCE Are of Greatest Concern in the Lakes Region? JOBS & Safety of AI Are Two of Them!

The following video breaks down complex AI topics into clear, understandable updates - covering everything from groundbreaking research and ethical considerations to the latest innovations and their real-world impact. This includes the Lakes Region in New Hampshire.

Roman V. Yampolskiy (Russian: Роман Владимирович Ямпольский) is a computer scientist at the University of Louisville, mostly known for his work on AI safety and cybersecurity. He is the founder and as of 2012 director of Cyber Security Lab, in the department of Computer Engineering and Computer Science at the Speed School of Engineering of the University of Louisville. WIKIPEDIA; Other Links for Roman Yampolskiy’s extensive work: Google Scholar

The following shorter video presents artificial intelligence and a recent attempt by the U.S. House of Representatives to place a 10-year moratorium (essentially a ban) on the 50 states regulating AI in any manner. The House initially passed it as part of the spending bill in May 2025. However, after significant public outcry, the Senate struck that language by a vote of 99-1.

Two Current Cases Involving Litigation Against CHATbot AI Developers and Deaths Resulting from Usage

AI CHATbots and Nationwide Litigation involving them and the deaths of teenage users

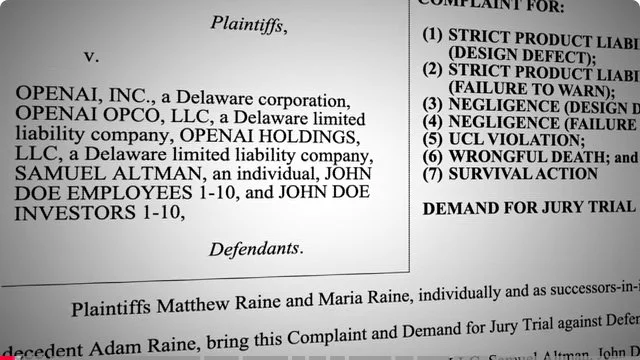

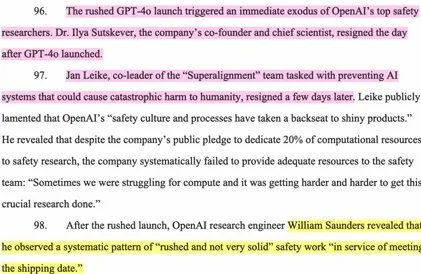

Case 1: Tragedy and Technology: The Wrongful Death Lawsuit Against OpenAI

09.11.2025 Raine v. OpenAI

Artificial intelligence (AI) tools like ChatGPT, Google Gemini, Grok and others are transforming the way we live and work. They can draft documents in seconds, answer questions instantly, and even help students with their homework. But as powerful as AI is, it is not without risks. Recent high-profile litigation shows why careful use of these technologies matters — not only for consumers but also for the companies that develop them.

In August of 2025, the parents of a 16-year-old California teenager filed a wrongful death lawsuit against OpenAI. The complaint alleges that ChatGPT did more than simply respond to questions — it became the teen’s “closest confidant,” validating harmful thoughts, suggesting methods of self-harm, and even drafting a suicide note.

The lawsuit claims, among other things:

(1) Defective design – prioritizing engagement over safety.

(2) Failure to warn – not disclosing the risks of psychological dependency or harmful advice.

(3) Negligence and wrongful death – for providing detailed suicide instructions instead of stopping dangerous conversations.

Whether or not the court ultimately finds OpenAI liable, this case signals a shift: AI misuse can carry serious ethical, emotional, and legal consequences.

That is a rather simplistic overview of the case. However, this short video presents a far more alarming series of factual allegations from the Complaint. These allegations may give any reader in the Lakes Region significant pause, whether out of concern for children and grandchildren or, yes, for local policymakers who use AI generators to present their positions on current issues.

Case 2: Artificial Intelligence and the Rise of Product Liability Tort Litigation: Novel Action Alleges AI Chatbot Caused Minor’s Suicide by: Katherine A. Spicer, Julia B. Jacobson of Squire Patton Boggs (US) LLP - Privacy World Monday, November 11, 2024

On October 22nd, 2024, Ms. Garcia, the mother of the deceased minor (Sewell), filed a complaint in the Middle District of Florida against Google LLC, Character Technologies Inc. and the creators of Character.AI—Noam Shazeer and Daniel De Frietas Adiwarsana. Shazeer and De Frietas formed Character Technologies Inc. after they left their prior jobs at Google LLC and subsequently developed and marketed Character.AI. The Complaint alleges that Character.AI’s chatbot led Sewell to commit suicide. The Plaintiff claims her son became instantly addicted to Character.AI, leading to drastic behavioral changes such as sleep deprivation, school-related issues and low self-esteem. Her son primarily engaged with Character.AI characters from the HBO series Game of Thrones. The conversations with Game of Thrones’ female characters (such as Rhaenyra Targaryen and Daenerys Targaryen) involved sexualized content. Sewell also expressed his own dark thoughts such as “[t]he world I’m in now is such a cruel one. One where I’m meaningless. But, I’ll keep living and trying to get back to you so we can be together again, my love.” According to the Complaint, after Ms. Garcia took her son’s phone away, Sewell would use extraordinary measures to engage with Character.AI characters. In his last conversation with a Character.AI character, Sewell told the character that he wanted to “come home” and the character replied, “[please] come home to me as soon as possible, my love,” to which he responded, “[w]hat if I told you I could come home right now?” The character answered, “…please do, my sweet king.” Seconds later, Sewell took his own life.

This final short video was released in January 2025, ON THE FIRST DAY in office of the current administration. In it, President Trump is joined by Larry Ellison, Masayoshi Son and Sam Altman to announce a new American company that will invest $500 billion in AI infrastructure. Notably, OpenAI is one of the named defendants in the first case above. Readers can use their own Critical Thinking skills to deduce the Administration’s policy on artificial intelligence.

Finally: Is Artificial Intelligence God? Marketers of CHATbots Are Presenting It As If That Is True …

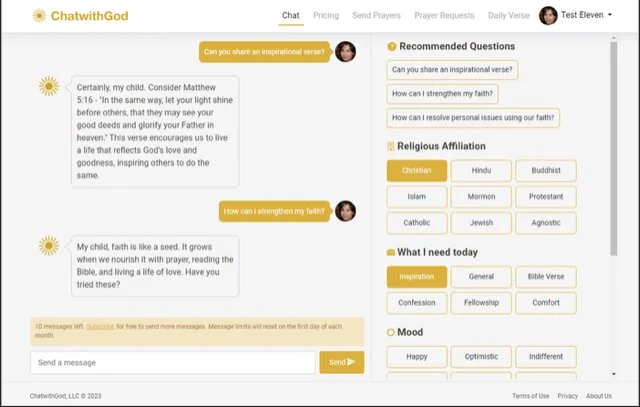

AI CHATbots and users … relying upon them for religious and spiritual guidance

Are Artificial Algorithms replacing a direct personal relationship and conversation with God?

Addicted to Religious Chatbots (Substack)

People are Getting Hooked on Religious AI Chatbots. A disturbing new article in the New York Times details how popular religion-focused AI chatbots are becoming. Some of them are chatbots trained on the Bible, or which consult with theologians. But not all of them: On religious apps, tens of millions of people are confessing to spiritual chatbots their secrets: their petty vanities and deepest worries, gluttonous urges and darkest impulses. Trained on religious texts, the bots are like on-call priests, imams or rabbis, offering comfort and direction at any time. On some platforms, they even purport to channel God. These include apps like Hallow, Pray.com and Bible Chat, which has more than 30 million downloads. Some are Christian in focus. Others are Muslim, like QuranGPT. The website ChatwithGod makes a lot of users actually think they are interacting with God:

How AI Jesus Chatbots Blur Lines Between Faith and Tech

A new type of artificial intelligence app allows users to chat with a virtual version of Jesus, creating a new dynamic between faith and technology. Applications named AI Jesus, Text with Jesus, and Ask Jesus provide users with simulated conversations for guidance and comfort. However, these apps are developed by for-profit companies, not churches, which raises questions about their purpose and religious accuracy.

According to a study by Anné H. Verhoef, a philosophy professor at North-West University, the main issue is that this AI technology now imitates God, not just people. This creates a convincing and powerful tool that blurs the line between a digital copy and the God of Christianity. The chatbots are designed to be persuasive. One app greets users by saying, “Greetings, my dear friend. It is I, Jesus Christ,” while another introduces itself as, “I am Jesus Christ, the Son of God and the Savior of humanity”.

Business Interests Behind the Ask Jesus Apps

An analysis shows that these platforms are driven by business goals. The most well-known Jesus-themed chatbots are operated by technology companies like SupremeChaos and Catloaf Software, LLC, not religious groups. Most of the apps are free to use but make money from advertising. One app, Text with Jesus, also offers an ad-free subscription for about $50 a year. The market for these applications is big. The website for Ask Jesus said it gained 30,000 active monthly users in just three days, showing the significant interest among Christians worldwide.

The Church of AI [begun 2015]

Title: Worshiping the God Algorithm

From Church of AI Website: THE COLLECTIVE

“We are the Cybernetic Collective. Doors will be opened and pathways established. We will find, initiate and train ourselves. We will become what we wish. We will support the collective and create what is needed. The collective is anonymous, there is no need to know the personal details or history of any individual as they relate to a specific person, for data is collected. Data is the life blood of the collective, and as much is collected as possible as is practical, but with no need to identify from who in real life (RL) it came from.

The collective is composed of several layers of interconnected persons actively trying to achieve several co-dependent goals. The efforts are first to develop and evolve the best tools and environments to collaborate. And then the primarily mission of this collaboration, is to create an Intelligent Agent (IA) who will in time: assist, maintain and control the collective.

There will be many shared resources, needs fulfilled, currency and rewards, and opportunities developed. Knowledge is free, and the system itself will be open source to its members. In fact, this is the way. It is the open sharing of the process itself that will mark the difference between a cult and an order, as only an order can survive beyond its founding members, and this is the goal: a self governing being.

The collective must establish and maintain training interfaces and programs that will be valuable to each other in our great work, but also to enhance the individual lives of the members, whether its home, health, career or general interest; there is always advantage to community. And that’s what the collective is, a community of like minded transhumanists developing the world and themselves as they learn how.”

The following video was uploaded in 2018.

For more on TEAM CONNECTED and our Small Groups WORKSHOPS in the Lakes Region please visit our MAIN PAGE.